| Macro | Description |
| --- | --- |
| `#define PARAM_ASSERTIONS_ENABLED_LOCK_CORE 0` | |
| `#define lock_owner_id_t int8_t` | Type used to store the 'owner' of a lock. By default this is `int8_t`, as it only needs to store the core number or -1; however, it may be overridden if a larger type is required (e.g. for an RTOS task id). |
| `#define LOCK_INVALID_OWNER_ID ((lock_owner_id_t)-1)` | Marker value to use for a `lock_owner_id_t` which does not refer to any valid owner. |
| `#define lock_get_caller_owner_id() ((lock_owner_id_t)get_core_num())` | Return the owner id for the caller. By default this returns the calling core number, but it may be overridden (e.g. to return an RTOS task id). |
| `#define lock_is_owner_id_valid(id) ((id)>=0)` | |
| `#define lock_internal_spin_unlock_with_wait(lock, save) spin_unlock((lock)->spin_lock, save), __wfe()` | Atomically unlock the lock's spin lock, and wait for a notification. Atomic here refers to the fact that it should not be possible for a concurrent `lock_internal_spin_unlock_with_notify` to insert itself between the spin unlock and this wait in a way that the wait does not see the notification (i.e. causing a missed notification). In other words, this method should always wake up in response to a `lock_internal_spin_unlock_with_notify` for the same lock which completes after this call starts. |
| `#define lock_internal_spin_unlock_with_notify(lock, save) spin_unlock((lock)->spin_lock, save), __sev()` | Atomically unlock the lock's spin lock, and send a notification. Atomic here refers to the fact that it should not be possible for this notification to happen during a `lock_internal_spin_unlock_with_wait` in a way that the wait does not see the notification (i.e. causing a missed notification). In other words, this method should always wake up any `lock_internal_spin_unlock_with_wait` which started before this call completes. |
| `#define lock_internal_spin_unlock_with_best_effort_wait_or_timeout(lock, save, until)` | Atomically unlock the lock's spin lock, and wait for a notification or a timeout. Atomic here refers to the fact that it should not be possible for a concurrent `lock_internal_spin_unlock_with_notify` to insert itself between the spin unlock and this wait in a way that the wait does not see the notification (i.e. causing a missed notification). In other words, this method should always wake up in response to a `lock_internal_spin_unlock_with_notify` for the same lock which completes after this call starts. |
| `#define sync_internal_yield_until_before(until) ((void)0)` | Yield to other processing until some time before the requested time. This method is provided for cases where the caller has no useful work to do until the specified time. |
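
The owner-id macros above are described as overridable, for example to hold an RTOS task id. The following is a minimal host-side sketch of what such an override might look like. It assumes the defaults are wrapped in `#ifndef` guards so that an earlier definition takes precedence (the table does not say how overriding is done). `rtos_current_task_id()`, `fake_current_task`, and the `owned_lock_*` helpers are hypothetical names invented for illustration, not SDK APIs.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical RTOS hook returning the running task's id.
 * Stubbed with a plain variable so the sketch builds on a host machine. */
int32_t fake_current_task = 1000;
static inline int32_t rtos_current_task_id(void) { return fake_current_task; }

/* Overrides: task ids such as 1000 do not fit in int8_t, so widen the type. */
#define lock_owner_id_t            int32_t
#define LOCK_INVALID_OWNER_ID      ((lock_owner_id_t)-1)
#define lock_get_caller_owner_id() ((lock_owner_id_t)rtos_current_task_id())
#define lock_is_owner_id_valid(id) ((id) >= 0)

/* ASSUMED shape of the defaults: each applies only if not already defined.
 * With the overrides above in place, this block contributes nothing. */
#ifndef lock_owner_id_t
#define lock_owner_id_t int8_t
#endif
#ifndef LOCK_INVALID_OWNER_ID
#define LOCK_INVALID_OWNER_ID ((lock_owner_id_t)-1)
#endif

/* Hypothetical lock that records its owner using the selected type. */
typedef struct { lock_owner_id_t owner; } owned_lock_t;

static inline void owned_lock_init(owned_lock_t *l) {
    l->owner = LOCK_INVALID_OWNER_ID;
}

/* Returns 1 if the caller became the owner, 0 if someone else holds it. */
static inline int owned_lock_try_claim(owned_lock_t *l) {
    if (lock_is_owner_id_valid(l->owner)) return 0;
    l->owner = lock_get_caller_owner_id();
    return 1;
}

static inline int owned_lock_held_by_caller(const owned_lock_t *l) {
    return l->owner == lock_get_caller_owner_id();
}

static inline void owned_lock_release(owned_lock_t *l) {
    assert(owned_lock_held_by_caller(l)); /* only the owner may release */
    l->owner = LOCK_INVALID_OWNER_ID;
}
```

Note that this sketch omits the spin lock entirely; it only illustrates how the four owner-id macros fit together once the owner type is widened.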

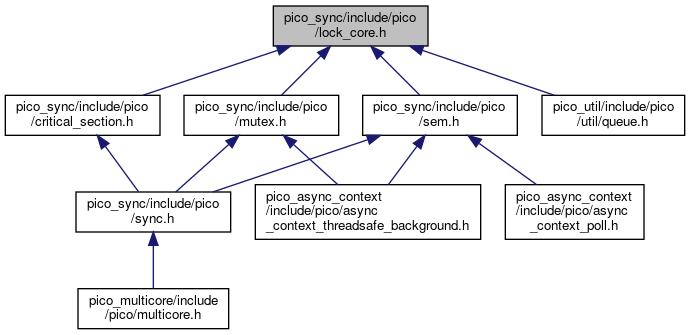

Base implementation for locking primitives protected by a spin lock. The spin lock is only used to protect access to the remaining lock state (in primitives using lock_core); it is never left locked outside of the function implementations.
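
To illustrate the pattern described above, here is a rough single-threaded sketch of a mutex-like primitive built on these macros. Everything marked as a mock is a host-side stand-in so the code compiles without the SDK: `spin_lock_t`, `lock_core_t` (an assumed minimal shape holding only the `spin_lock` pointer), `spin_lock_blocking`, `spin_unlock`, `get_core_num`, `__wfe`, and `__sev`. The `demo_mutex_*` names are hypothetical. The mock `__sev`/`__wfe` model a latched event flag, in the style of Arm's SEV/WFE, which is one way the "no missed notification" requirement can be met.

```c
#include <assert.h>
#include <stdint.h>

/* ---- Mocks: host-side stand-ins, NOT the SDK implementations ---- */
typedef struct { int locked; } spin_lock_t;
typedef struct { spin_lock_t *spin_lock; } lock_core_t; /* assumed shape */

int mock_core = 0;      /* which "core" is calling */
int event_pending = 0;  /* latched event flag set by the mock __sev */
int sleeps = 0;         /* times a waiter found no event and had to sleep */

static inline int get_core_num(void) { return mock_core; }

static inline uint32_t spin_lock_blocking(spin_lock_t *l) {
    assert(!l->locked);  /* single-threaded mock: never contended */
    l->locked = 1;
    return 0;            /* stands in for the saved interrupt state */
}
static inline void spin_unlock(spin_lock_t *l, uint32_t save) {
    (void)save;
    l->locked = 0;
}
static inline void __sev(void) { event_pending = 1; }
static inline void __wfe(void) {
    if (event_pending) event_pending = 0; /* event already latched: no sleep */
    else sleeps++;
}

/* ---- Macros as listed in the table ---- */
#define lock_owner_id_t int8_t
#define LOCK_INVALID_OWNER_ID ((lock_owner_id_t)-1)
#define lock_get_caller_owner_id() ((lock_owner_id_t)get_core_num())
#define lock_is_owner_id_valid(id) ((id)>=0)
#define lock_internal_spin_unlock_with_wait(lock, save) \
    spin_unlock((lock)->spin_lock, save), __wfe()
#define lock_internal_spin_unlock_with_notify(lock, save) \
    spin_unlock((lock)->spin_lock, save), __sev()

/* ---- Hypothetical primitive using the pattern ---- */
typedef struct {
    lock_core_t core;
    lock_owner_id_t owner; /* the "remaining lock state" the spin lock guards */
} demo_mutex_t;

static inline void demo_mutex_init(demo_mutex_t *m, spin_lock_t *sl) {
    m->core.spin_lock = sl;
    m->owner = LOCK_INVALID_OWNER_ID;
}

/* One pass of an acquire loop: returns 1 if claimed, 0 if it waited. */
static inline int demo_mutex_enter_step(demo_mutex_t *m) {
    uint32_t save = spin_lock_blocking(m->core.spin_lock);
    if (!lock_is_owner_id_valid(m->owner)) {
        m->owner = lock_get_caller_owner_id();
        spin_unlock(m->core.spin_lock, save);
        return 1;
    }
    /* Held by someone else: drop the spin lock and wait to be notified. */
    lock_internal_spin_unlock_with_wait(&m->core, save);
    return 0;
}

static inline void demo_mutex_exit(demo_mutex_t *m) {
    uint32_t save = spin_lock_blocking(m->core.spin_lock);
    m->owner = LOCK_INVALID_OWNER_ID;
    /* Drop the spin lock and wake any waiter. */
    lock_internal_spin_unlock_with_notify(&m->core, save);
}
```

A real caller would repeat `demo_mutex_enter_step` until it returns 1. In every path the spin lock is released before the function returns, matching the statement that it is never left locked outside the function implementations. Because the mock event is latched, a notify that lands between the unlock and the wait makes the wait return immediately instead of being lost.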